Duration – 4 Hours

Our sincere apologies for the length of the outage reported today. We always strive to give our customers the best possible service, and today we failed to identify and resolve the issue quickly enough.

What Happened?

From approximately 08:30 on Thursday 30th December our services started reporting connectivity issues. These increased in severity until, at approximately 09:15, it was not possible to connect to any of our services. At this point our main network connections were reporting a "network cable unplugged" error.

The issue was restricted to wider network access and at no time was data loss an issue. The services continued to run successfully and data integrity was retained.

Full service was restored at approximately 13:30.

Why Did This Happen?

A network switch reported spikes in packets sent by our services and, within 30 minutes, hit its maximum threshold for packet traffic. This activated the network's StormGuard feature, which helps to prevent malicious traffic from taking over the network by isolating the offending section of the network.

No corresponding increase in traffic was reported on our servers, and the general network traffic generated by our services was within normal limits.

The switch was reset, but it repeatedly re-triggered StormGuard and blocked access to our services.
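To illustrate the kind of threshold behaviour described above, here is a minimal sketch of packet-rate storm control on a single switch port. We do not know how StormGuard is implemented internally, so the class name, the threshold value and the "stay blocked until reset" behaviour are all assumptions made for illustration.

```python
from dataclasses import dataclass


@dataclass
class StormControlPort:
    """Toy model of one switch port with a packet-rate threshold.

    The threshold and the "blocked until reset" behaviour are assumptions
    for illustration, not StormGuard's real settings.
    """
    max_packets_per_second: int
    blocked: bool = False

    def observe(self, packets: int, interval_seconds: float) -> bool:
        """Record one measurement interval; return True if traffic may pass."""
        if self.blocked:
            return False
        rate = packets / interval_seconds
        if rate > self.max_packets_per_second:
            # Threshold exceeded: isolate the port from the wider network.
            self.blocked = True
            return False
        return True

    def reset(self) -> None:
        """Clear the block, as happens when the switch is reset."""
        self.blocked = False


port = StormControlPort(max_packets_per_second=1000)
print(port.observe(packets=500, interval_seconds=1.0))   # True: normal traffic passes
print(port.observe(packets=5000, interval_seconds=1.0))  # False: spike triggers the block
print(port.observe(packets=500, interval_seconds=1.0))   # False: still blocked afterwards
port.reset()
print(port.observe(packets=500, interval_seconds=1.0))   # True: passes again after reset
```

In this model, a switch that keeps measuring a spike will re-apply the block after every reset, even when the traffic actually sent by the servers is within normal limits. That matches the behaviour we saw.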

What Did We Do?

Initially we requested that all cabling be checked, and it was found to be in place and seemingly active. We then checked our network drivers and reset all connections. At this point we suspected a hardware failure in our own network cards, so a new server with new network cards was provisioned in case we could not restore connections and needed to move data.

Whilst the new server was being provisioned, we worked with our provider to audit connection points further up the network chain, which are outside our direct control. Eventually a network switch was identified as blocking traffic from our servers.

Once the switch had been identified as the cause of the outage, and no corresponding issue had been found on the servers, we requested that the network switch be swapped over to its redundant backup partner.

The switch is a deep-level switch shared by other independent services, so the changeover to the redundant partner takes time to carry out.

Once the partner switch was brought online and the block removed, all services were restored and all data was fully available.

What Are We Still Doing?

We are monitoring network traffic between our servers and the switch, and we have started an audit of the server logs and server access to rule out any possibility of malicious programs or unauthorised access.

What Could Have Prevented This Issue and What Now?

We did not identify the ultimate cause quickly enough. The server interface gives no indication of a block that is applied further upstream. Our service provider has already prepared some guidance on how to identify this issue in the future and is looking at ways to include StormGuard notifications in the user interface.

This issue was caused by a hardware failure, not by a bug or misconfiguration. We could not have prevented the hardware failure, as such failures will happen eventually. However, because we were not aware of the potentially blocking actions of StormGuard, it took us longer than necessary to restore access.

We will be updating our disaster recovery procedure to include checks on upstream switches and other network hardware whenever there appear to be network hardware issues with our servers.
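As a rough illustration of what such a check could look like, the sketch below probes a list of network points in order, nearest first, and reports the first one that does not respond. The hop labels, addresses and ports are hypothetical placeholders (documentation-only IP ranges), and a plain TCP connection test is only one assumed way of probing. In practice the state of an upstream switch may only be visible through the provider's own tooling.

```python
import socket
from typing import Callable, Optional, Sequence, Tuple

Hop = Tuple[str, str, int]  # (label, host, TCP port)


def tcp_probe(host: str, port: int, timeout: float = 2.0) -> bool:
    """Return True if a TCP connection to host:port succeeds within timeout."""
    try:
        with socket.create_connection((host, port), timeout=timeout):
            return True
    except OSError:
        return False


def first_unreachable(
    hops: Sequence[Hop],
    probe: Callable[[str, int], bool] = tcp_probe,
) -> Optional[str]:
    """Probe hops in order, nearest first; return the label of the first failure.

    Returns None when every hop responds.
    """
    for label, host, port in hops:
        if not probe(host, port):
            return label
    return None


# Hypothetical hops, checked with a stand-in probe so the sketch runs offline.
hops = [
    ("local gateway", "192.0.2.1", 443),
    ("provider switch", "192.0.2.254", 443),
    ("public endpoint", "198.51.100.10", 443),
]
reachable = {"192.0.2.1"}
print(first_unreachable(hops, probe=lambda host, port: host in reachable))
# prints "provider switch"
```

Working outwards from the server in a fixed order is the point of the design: it directs attention to upstream hardware early, rather than only after local cabling, drivers and network cards have been ruled out.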

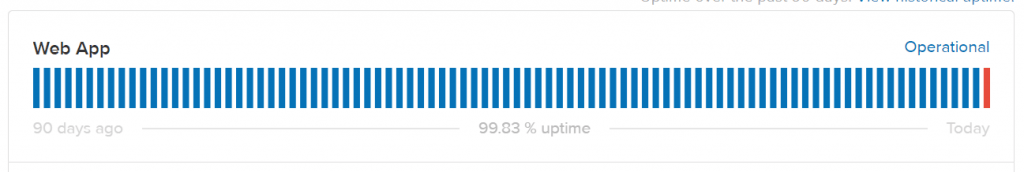

We have had very few outages in our history. This is the first major outage that affected all customers and persisted for more than a few minutes. Our last unexpected downtime was reported on March 20th 2021 and lasted for less than 3 minutes.

You can view our current and historical status report at https://teamkinetic.statuspage.io/
